Lobbying, Emotions, and Diamonds

How Fred Rogers can help us think about authenticity in the age of generative AI

I haven’t seen anything quite like the adoption of ChatGPT. It’s never really a surprise when my plugged-in friends talk about an up-and-coming tool or app. Roam, Notion, Superhuman, Endel, Telegram, and many more: their phones are full of abandoned apps, each begging to be re-downloaded via that cute cloud-with-a-download-arrow icon after being mercilessly auto-deleted by the algorithm to save space.

But it’s ChatGPT’s usage by my normie friends that has piqued my interest. How does my friend’s dad, who wouldn’t even consider talking about cryptocurrency, bring it up on his own? How are people already imagining a new type of job: prompt engineer? How are high school students I know already using it to apply for, and win, scholarships? It’s a Cambrian explosion of creativity, along with more than a hint of trepidation about what is to come.

I’m not alone in these observations. But I do think there is something I haven’t seen considered elsewhere: how do we feel about being manipulated by AI?

And if we acknowledge that we don’t want to be manipulated by AI models, then what could the future of ensuring human “authenticity” look like?

I. Emotional manipulation: Pulling at our heartstrings

During a recent trip to Denver and Boulder, in what has become an annual ritual, I picked up The Best American Essays 2022,[1] an anthology of essays selected by Alexander Chee. One of the essays was Ghosts by Vauhini Vara. Its premise is simple:

I had always avoided writing about my sister’s death. At first, in my reticence, I offered GPT-3 only one brief, somewhat rote sentence about it. The AI matched my canned language; clichés abounded. But as I tried to write more honestly, the AI seemed to be doing the same. It made sense, given that GPT-3 generates its own text based on the language it has been fed: Candor, apparently, begat candor. In the nine stories below, I authored the sentences in bold and GPT-3 filled in the rest.

It should be no surprise to anyone who has used ChatGPT that the generated stories were pretty good. They had the sorrow, guilt, and angst typical of such stories. If Vauhini had chosen one of them and tried to pass it off as her own to her editor, I’m not sure anyone would have been able to tell.

That got me thinking: how would I feel if authors whose work I had spent a lot of time with used AI-generated text without telling me? The thought made me a bit uneasy.

Let’s take the thought experiment to an extreme.

Imagine you are at the end of a book like When Breath Becomes Air by Paul Kalanithi, reading the final lines the dying author wrote to his infant daughter, who would never see her father as a fully conscious human being:

When you come to one of the many moments in life where you must give an account of yourself, provide a ledger of what you have been, and done, and meant to the world, do not, I pray, discount that you filled a dying man’s days with a sated joy, a joy unknown to me in all my prior years, a joy that does not hunger for more and more but rests, satisfied. In this time, right now, that is an enormous thing.

I know for a fact that that particular paragraph has made some of the most stoic guys I know weep on airplanes.

How would they feel if the inside flap of the dust jacket said something like, “Paul Kalanithi is an AI large-language model developed in Guilin, China, by a team of seven anonymous teenagers”?

I know it wouldn’t sit well with me. Maybe even make me furious.

That’s because part of what we consume is the context of who the author is. When I read that book, I thought (at least subconsciously) about how Paul was part of the Indian diaspora, with its focus on delayed gratification and its general reluctance toward outward displays of emotion. So a paragraph like that draws its power not just from the rhythm Paul builds with short phrases, or from knowing that this is the last chapter of the book with the inevitable just around the corner, but also from the fact that I could see part of myself in him.

How could a large-language model, however well conceived and advanced, possibly replicate that sense of belonging? Of being seen by others as you see yourself? Whatever the whiz kids in Silicon Valley, Shenzhen, or Tel Aviv come up with next, I don’t think it’s possible to recreate that.

II. Political manipulation: Increasing the noise-to-signal ratio

Let’s move from emotional manipulation at a personal level to mass manipulation. Living in Washington, D.C., I never have this possibility too far from my mind.

A story in The New York Times discussed using LLMs as lobbyists, and an academic paper examined how effective this use case can be. It found that:

LLMs [Large Language Models] can simulate many different personas and be encouraged to take on many different approaches to tasks.

Read: we can create an endless volume of letters, voicemails, and other materials to persuade Congress and other stakeholders that there is wide agreement or disagreement about any topic. In other words, we can raise the level of noise about any topic.[2]

Among the potential downsides, the authors concluded:

AI lobbying activities could, in an uncoordinated manner, nudge the discourse toward policies that are unaligned with what traditional human-driven lobbying activities would have pursued. This could occur through the more formal channels of written letters to regulators and congresspeople, or through the informal channels of influencing legislator’s perceptions of public opinion.

And that:

This does not imply the existence of a strongly goal-directed agentic autonomous AI. Rather, this may be a slow drift, or otherwise emergent phenomena.

While I have some confidence in our ability to protect the arts, I have little hope that we can maintain that level of integrity in other domains. At the end of the day, lobbying is an arms race, and you cannot ask one side (or both) to set aside a tool that could give them a massive advantage in pursuing their goals.

Pandora’s box has been opened. And it can’t be closed.

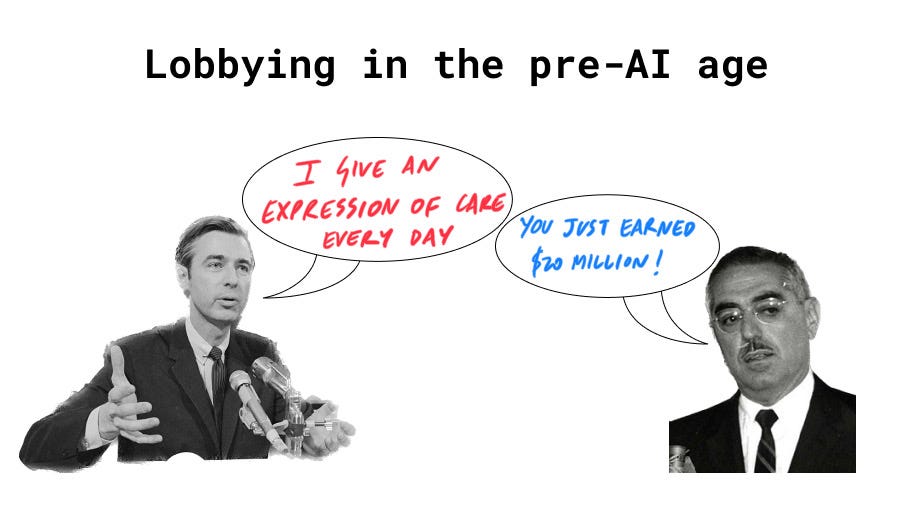

Compare this approach to lobbying with Fred Rogers’ testimony in May 1969. Rogers appeared before the Senate Subcommittee on Communications to argue against gutting $20 million in funding for the Corporation for Public Broadcasting, which in turn funds PBS:

And this is what — This is what I give. I give an expression of care every day to each child, to help him realize that he is unique. I end the program by saying, “You’ve made this day a special day, by just your being you. There’s no person in the whole world like you, and I like you, just the way you are.” And I feel that if we in public television can only make it clear that feelings are mentionable and manageable, we will have done a great service for mental health. I think that it’s much more dramatic that two men could be working out their feelings of anger — much more dramatic than showing something of gunfire. I’m constantly concerned about what our children are seeing, and for 15 years I have tried in this country and Canada, to present what I feel is a meaningful expression of care.

Rogers gives a couple more examples, at which point Senator John Pastore of Rhode Island, the chairman of the subcommittee, says:

Well, I’m supposed to be a pretty tough guy, and this is the first time I’ve had goose bumps for the last two days.

And then he says:

I think it’s wonderful. I think it’s wonderful. Looks like you just earned the 20 million dollars.

If that doesn’t move you, I don’t know what will. I’ve never quite seen anything like it: a public official, on national television, changing his mind in an instant because of a thoughtful communicator.

How do we think about protecting the power of that from the noise created by AI-generated text?

III. Diamonds Are Forever (whether they are real or fake)

In another conversation, I asked a friend of mine how she would feel if she got a lab-grown diamond ring for her engagement. Her response?

Absolutely no way!

My understanding is that there is no structural difference between lab-grown and naturally occurring diamonds. But this is an understandable reaction. While diamonds are defined as a solid form of the element carbon with its atoms arranged in a crystal structure called diamond cubic, the colloquial meaning of the term is very much about naturally occurring gems.

However culturally conditioned that colloquial definition may be, the revealed preference is real: only about 10% of engagement rings in the US use lab-grown diamonds.[3] It’s tough to argue with the fact that even the most educated buyers choose natural diamonds, despite their pitfalls of opaque sourcing and higher costs.

Could that be how we come to look at generative AI? Diamonds, both lab-grown and naturally occurring, are rated on the 4Cs: Color, Clarity, Cut, and Carat Weight.

Could we, in the future, adopt a similar system for writing, campaigning, and other domains? Next to a movie’s IMDb rating and Tomatometer score might sit an AI-ness rating: a measure of how much of the movie was conceived by something that was not human.

Maybe there will be different dimensions of how augmented a piece of media is:

Promptness: how often AI was used to get past writer’s block.

Creation: how often AI was used to create entire sections.

Post-production: how much post-production fixing was done without human direction.

Rough analogues already exist. People talk about how the stunts in a movie like Top Gun: Maverick were “real” and not done in post-production using CGI. We know that these “real” stunts are more dangerous and more expensive, but we still regard them as a higher form.

Each piece of media would carry embedded metadata describing these augmentation characteristics, according to criteria set by some standards board.

IV. Fin

I think we’ll continue to talk about AI and its implications in this newsletter and elsewhere.

What’s most interesting to me is how the structures (compute becoming ever cheaper, though its cost curve may be plateauing) and the agents (Satya Nadella putting his foot down and making a directional bet on the future of Microsoft) interact to shape the future being created. There is incredible path dependence in all of the key technologies we have today, and I doubt that AI will be any different.

Until the next one,

Sid

[1] At the wonderful Tattered Cover Bookstore at Denver’s Union Station.

[2] As if more noise was needed.